ซื้อรถมือสอง ทำไมประกันถึงแพงกว่ารถมือหนึ่ง?

เหล่าสาวก “มือสอง” หลายท่านคงเคยเจอประสบการณ์ “อกหัก” กับดอกเบี้ยประกันรถยนต์ที่พุ่งสูงปรี๊ด ต่างจากรถป้ายแดงที่มักได้อัตราดอกเบี้ยสุดคุ้ม อะไรคือสาเหตุที่ทำให้ประกันรถมือสองนั้น “แพงกว่า”? บทความนี้จะพาทุกท่านไปไขข้อสงสัย พร้อมเผยเคล็ดลับคว้าประกันโดนใจ ดอกเบี้ยไม่บานปลาย ทำไมประกันรถมือสองถึงแพงกว่ารถมือหนึ่ง? วิธีการเลือกประกันรถมือสองให้เหมาะสม ข้อควรระวัง ประกันรถมือสองมีราคาแพงกว่ารถมือหนึ่ง เนื่องมาจากความเสี่ยงในการซ่อมแซม สถิติการเกิดอุบัติเหตุ มูลค่าของรถ และข้อมูลในการประเมินความเสี่ยง ผู้ซื้อควรเปรียบเทียบราคาและความคุ้มครองจากหลายบริษัท เลือกความคุ้มครองที่เหมาะสม พิจารณาเงื่อนไขต่างๆ และเลือกบริษัทประกันที่น่าเชื่อถือ

เทรดเงินตราต่างประเทศ ความเสี่ยงและผลตอบแทน

การ เทรดเงินตราต่างประเทศ หรือที่รู้จักกันทั่วไปในชื่อการซื้อขายฟอเร็กซ์ เกี่ยวข้องกับการผสมผสานระหว่างความเสี่ยงและผลตอบแทนที่อาจเกิดขึ้น การทำความเข้าใจความเสี่ยงและผลตอบแทน เป็นสิ่งสำคัญสำหรับเทรดเดอร์ในการตัดสินใจอย่างมีข้อมูลและจัดการพอร์ตการลงทุนอย่างมีประสิทธิภาพ ภาพรวมของความเสี่ยงและผลตอบแทนที่เกี่ยวข้องกับการซื้อขายฟอเร็กซ์ เรามีมาแนะนำกัน

เหตุผลที่ต้องต่อวีซ่าทำงาน

อาจจะกล่าวได้ว่าประเทศไทยเองก็เป็นอีกหนึ่งประเทศที่เป็นจุดหมายปลายทาง สำหรับคนที่อยากมีงานทำ ส่วนหนึ่งก็เนื่องจากว่าในประเทศไทยมีอาชีพที่หลากหลาย โดยงานเองก็มีจำนวนหลายต่อหลายด้านอีกด้วย ดังนั้นสิ่งที่บางคนสงสัยก็คือว่าเหตุผลในการต่อวีซ่าทำงานคือสิ่งใดบ้าง มาดูพร้อมกัน 1.ทำงานที่เหมาะสมกับตนเอง ต้องการทำงานนี้ต่อ เนื่องจากว่าวีซ่าทำงานในประเทศไทยจะอยู่ที่ 90 วัน ทำให้ไม่เพียงพอสำหรับการทำงานอย่างแท้จริง ดังนั้นจึงไม่คนต่างชาติหลายๆ คนเลยก็ว่าได้ที่ติดใจการใช้ชีวิตในประเทศไทยและอยากอยู่ทำงานต่อ เนื่องจากว่าประเทศไทยเป็นประเทศในฝันที่มีข้อดีหลายๆ ประการเลยก็ว่าได้ ไม่ว่าจะเป็นอาหารการกินที่อุดมสมบูรณ์ มีหลายแบบและหลายราคาด้วยกัน ไม่ว่าจะเป็นอาหารชาติใดก็สามารถหาซื้อได้ในประเทศไทยทั้งสิ้น จึงไม่น่าแปลกใจเลยที่หลายๆ คนจะเลือกทำงานในประเทศไทยเพราะว่าเป็นการทำงานที่เหมาะสมกับตนเอง ซึ่งเมื่อได้ลองทำไปแล้วก็เห็นได้ชัดเจนว่าเป็นงานที่ดีและน่าสนใจยิ่ง อาจจะกล่าวได้ว่าการต่อวีซ่าทำงานคือสิ่งที่เหมาะสมมากที่สุดเลยก็ว่าได้ 2.เหมาะสมกับคนที่ชอบสถานที่ท่องเที่ยว ไลฟ์สไตล์ในประเทศไทยเป็นไลฟ์สไตล์ที่โดดเด่นและลงตัวอย่างยิ่ง จึงไม่น่าแปลกใจเลยที่หลายๆ คนอยากจะเลือกสถานที่ท่องเที่ยวซึ่งตอบโจทย์สำหรับตนเองในทุกด้านด้วยกัน ไม่ว่าจะเป็นด้านของการเดินทาง ด้านของการท่องเที่ยว เมื่อทำงานครบถ้วนจนจบเวลาแล้วก็สามารถไปเที่ยวได้ ไม่ว่าจะเป็นในกรุงเทพมหานครหรือว่าต่างจังหวัดก็ได้ทั้งสิ้น อาจจะกล่าวได้ว่าการเลือกสถานที่ท่องเที่ยวที่ดีนั้นมีคุณค่าและความหมายอย่างมาก 3.นายจ้างพึงพอใจในตัวลูกจ้าง สิ่งที่ทุกคนเห็นพ้องต้องกันก็คือ บ่อยครั้งเลยที่นายจ้างพึงพอใจในตัวลูกจ้างเนื่องจากการหาคนทำงานที่มีคุณภาพไม่ได้หาได้ง่ายๆ นั่นเอง อีกทั้งคนที่มีความรับผิดชอบก็ยังหาได้ยากยิ่งอีกเช่นกัน จึงไม่น่าแปลกใจเลยที่นายจ้างหลายๆ คนจะเป็นฝ่ายทำเรื่องให้ลูกจ้างขอต่อวีซ่าทำงาน โดยอาจจะมีการว่าจ้างบริษัทที่สามารถดำเนินการด้านนี้ได้โดยเฉพาะ เหมาะสมกับการประหยัดเวลา เมื่ออยากจะให้ลูกจ้างได้ต่อใบอนุญาตเร็วๆ อีกด้วย และนี่ก็คือสิ่งที่หลายๆ คนอาจจะไม่เคยรู้มาก่อนเกี่ยวกับการเลือกสิ่งที่ดีๆ โดยเฉพาะอย่างยิ่งคนที่ต้องการขอต่อวีซ่าทำงานในประเทศไทย ขั้นตอนเองก็ไม่ได้ยุ่งยากหรือว่าลำบากแต่ประการใดเลยก็ว่าได้ เหมาะสมกับคนที่ต้องการให้การทำงานเป็นเรื่องที่ง่ายดายกว่าเดิมนั่นเอง ยิ่งการทำงานในประเทศไทยด้วยแล้ว หากมีใบอนุญาตก็จะใช้ชีวิตสะดวกมากกว่าเดิมแน่นอน

อยากเขียนเรซูเม่ สมัครงาน ให้ดูโปร ต้องมี 3 ข้อนี้

มาแนะนำข้อมูลดี ๆ ที่เกี่ยวกับนักศึกษาจบใหม่ หรือนักเรียนจบใหม่ที่กำลังฝึกหัดเขียนเรซูเม่ สมัครงาน ของตัวเองให้ดูปังกันอีกแล้ว ถ้าคุณอยากจะเพิ่มระดับความโปรในการเขียนเรซูเม่ของคุณให้มากขึ้น มีความโดดเด่นจนกระทั่งเข้าตากรรมการ ต้องมี 3 ข้อที่เรานำมาแนะนำกันนี้ เขียนเรซูเม่ สมัครงาน ให้ดูโปร อย่าลืม 3 ข้อนี้เด็ดขาด! มาดูกันดีกว่าว่าการเขียนสมัครงานให้แลดูมีความเป็นโปรมากขึ้น จะต้องประกอบด้วยองค์ประกอบอะไรบ้าง? 1. ดึงความสนใจ ไปที่ความสำเร็จของคุณ ให้คุณเลือกความสำเร็จที่สำคัญที่สุด 3 – 4 อันดับแรกของคุณ ที่คุณเคยผ่านประสบการณ์มา หรือผ่านการเทรนมาไว้ที่หัวข้อแรก ๆ เช่น…คุณเคยมีประสบการณ์เป็นครูอาสาขึ้นไปสอนน้องที่บนดอย หรือเคยมีประสบการณ์เวิร์คแอนด์เทรเวลในระยะเวลาสั้น ๆ สิ่งที่เป็นความสำเร็จของคุณที่คุณรู้สึกภาคภูมิใจ ให้นำมาใส่ไว้ตรงจุดนี้ 2. รวมหัวข้อที่คล้ายกันไว้ด้วยกัน อย่าทำให้เรซูเม่รก! ยกตัวอย่างเช่น คุณต้องการใส่ข้อมูลสรุปประวัติย่อ หรือวัตถุประสงค์ของประวัติย่อ ให้คุณเลือกใส่อย่างใดอย่างหนึ่ง คุณไม่ควรใส่ทั้ง 2 อย่าง และถ้าคุณเพิ่งเรียนจบมาหมาด ๆ และเพิ่งสมัครงานครั้งแรก ยังไม่เคยมีประสบการณ์ในการทำงานมาก่อนเลยแม้แต่ครั้งเดียว อย่าใส่ประวัติการทำงานที่ว่างเปล่าลงไปเด็ดขาด แต่คุณอาจแทนที่ หัวข้อของประสบการณ์ที่เกี่ยวข้องหลักสูตรที่เกี่ยวข้อง ผลสัมฤทธิ์ทางการเรียน และประสบการณ์อื่น ๆ […]

ปลดล็อกสกิลเพิ่มรายได้ปี 2023 เพื่อมนุษย์เงินเดือนโดยเฉพาะ

หากคุณคือมนุษย์เงินเดือนคนหนึ่งที่มีความฝัน อยากมีบ้าน มีรถ หรือสิ่งของในฝันเป็นของขวัญปีใหม่ให้กับตัวเองและครอบครัว หรือใครที่มีกำลังผ่อนบ้าน ผ่อนรถ ผ่อนโทรศัพท์มือถือ เฟอร์นิเจอร์ หรือข้าวของเครื่องใช้อยู่ แล้วอยากจะปลดภาระค่าใช้จ่ายในส่วนนี้ให้หมดในเร็ววัน เราอยากชวนคุณมาตั้งเป้าหมายในปี 2023 นี้ และวางแผนทำความฝันนี้ให้สำเร็จไปพร้อม ๆ กัน ด้วยการหารายได้เพิ่มโดยที่ไม่กระทบกับเวลาการทำงานประจำของตัวเอง ซึ่งจะมีวิธีไหนบ้าง ไปดูกันเลย 1. รับทำข้าวกล่องและขนม หากคุณเป็นคนมีฝีมือในการทำอาหารหรือขนม การรับทำข้าวกล่องหรือขนมตามออเดอร์ก็จะช่วยให้คุณมีรายได้เพิ่มอีกหนึ่งช่องทาง แถมไม่ต้องลงทุนสูง ไม่จำเป็นต้องมีหน้าร้าน สามารถทำในคอนโดหรือครัวในบ้านได้เลย โดยอาจจะเริ่มต้นจากการรับทำตามจำนวนออร์เดอร์เล็ก ๆ ก่อน เช่น ข้าวกล่องมื้อเช้าหรือกลางวันของเพื่อน ๆ ในออฟฟิศ งานประชุมภายในแผนก งานเลี้ยงภายในแผนก เพื่อจะได้ไม่ต้องตุนวัตถุดิบมากมายนัก เมื่อเริ่มทำคล่อง จัดการออเดอร์ได้อย่างไม่สะดุดแล้ว ก็ค่อย ๆ ขยับขยายเพิ่มจำนวนเปิดรับออร์เดอร์ที่เยอะขึ้นได้ หรืออาจจะเพิ่มทางเลือกของเมนูเข้าไป เช่น ขนมคลีน อาหารคลีน เป็นเมนูเพื่อสุขภาพ ที่สามารถสร้างรายได้เพิ่มได้ดีมากอีกด้วย 2. รับหิ้วสินค้า ตลอดทั้งปีจะมีการจัดโปรโมชันลดราคาสินค้า ยิ่งช่วงใกล้งานเทศกาลปีใหม่แบบนี้ แน่นอนว่าสินค้าแบรนด์ต่าง ๆ พากันจัดมหกรรมลดราคาสินค้ากันแทบทุกแบรนด์ แต่ใช่ว่าทุกคนจะมีเวลาเดินทางไป […]

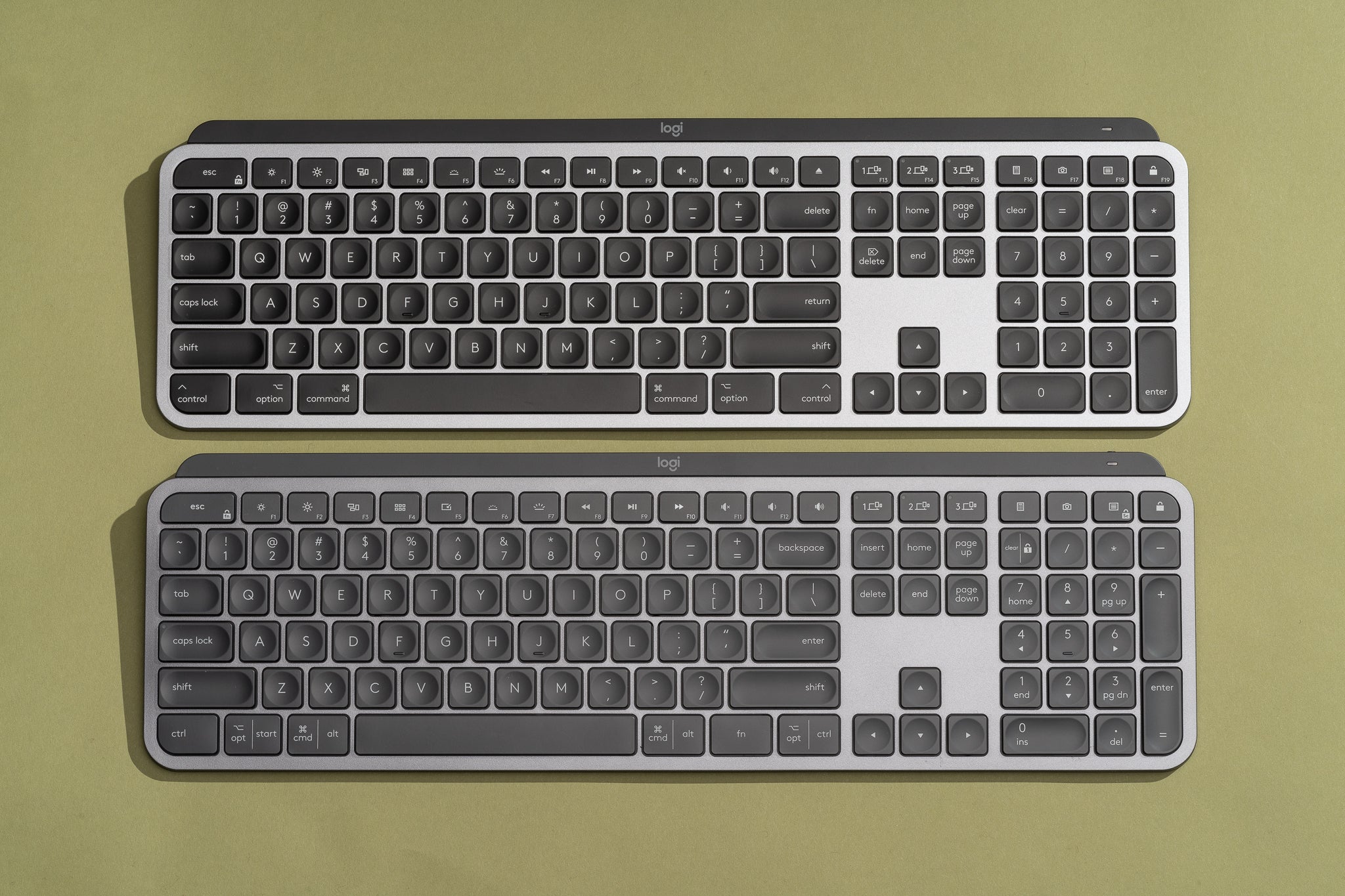

มาเพิ่มความสะดวกสบายในการทำงานด้วยคีย์บอร์ดไร้สายกันดีกว่า

ในปัจจุบันโลกของเราพัฒนาไปไกล เทคโนดลยีต่างๆ ได้พัฒนาขึ้นมาเพื่อเพิ่มความสะดวกสบายให้กับมนุษย์ มองง่ายๆ อย่างเรื่องของคอมพิวเตอร์ ที่เมื่อก่อนจะต้องมีอุปกรณ์มากมาย ตอนนี้ก็มีทางเหลืออย่างโน็ตบุ๊คที่มีระบบปฏิบัติการเช่นเดียวกับคอม แต่พกพาสะดวก มีแค่เครื่องเดียวก็พกไปทำงานได้ทุกที่อุปกรณ์คอมพิวเตอร์ก็เช่นเดียวกัน จากเมื่อก่อนที่จะต้องมีสาย USB เพื่อเชื่อมต่อ เดี๋ยวนี้แค่เปิดบลูทูธก็ใช้งานได้แล้ว วันนี้จะพาไปเพิ่มความสะดวกในการทำงานด้วยคีย์บอร์ดไร้สายกันเลย จะมียี่ห้อไหนมาแนะนำบ้างนั้น ดูกันเลย Royal Kludge RK68 RGB สายหวาน สายสีรุ้งต้องชอบมากแน่นๆ เพราะน้องมาพร้อมไฟ RGB สีสันสวยงาม สามารถปรับรูปแบบของไฟได้ กว่า 18 Mode เลยทีเดียว เป็นแบบ Mechanical คีย์บอร์ด ที่สามารถเปลี่ยนปุ่ม เปลี่ยนสวิตช์ได้ รองรับการเชื่อมต่อทั้ง PC, Notebook, Tablet และ Smartphone ทั้งระบบ Android และ iOS เลย Logitech MX Keys Advanced Wireless คีย์บอร์ดแบรนด์ดังที่ใครๆ ก็อยากได้ รุ่นนี้จะมีให้เลือกทั้งแบบปกติและแบบ mini […]

Application สำหรับนักอ่านนิยาย

ในโลกของอินเตอร์เน็ตและเทคโนโลยีที่ทันสมัย ทำให้ทุกอย่างเริ่มเปลี่ยนไป หลายอย่างย้ายเข้ามาอยู่ในมือถือแค่เครื่องเดียว ไม่ว่าจะเป็นการสื่อสาร ข่าวสารต่างๆ หรือแม้แต่การอ่านหนังสือ เดี๋ยวนี้การอ่านสามารถเกิดขึ้นได้ทุกที่ ทุกเวลา แบบไม่จำเป็นต้องมีหนังสือเล่มอยู่กับตัวอีกต่อไป เพียงแค่เรามีแท็บเล็ตหรือสมาร์ทโฟน ก็สามารถหาความรู้จากการอ่านกันได้แล้ว ไม่ว่าจะเป็นการอ่านหนังสือเพื่อสอบ หรือการอ่านนิยายก็เช่นกัน เดี๋ยวนี้มีแอปพลิเคชั่นมากมายที่เราสามารถอ่านนิยายได้อย่างหลากหลาย ดังนี้ Joylada นิยายแชทที่ได้รับความนิยมเป็นอย่างมาก เพราะมีความสนุกสนานและเสมือนคนคุยกันจริงๆ บทบรรยายมีน้อยแต่เข้าใจมาก เป็นแอปนิยายที่เปิดตัวด้วยรูปแบบแชท และต่อมาคนเขียนสามารถแต่งบทบรรยายเพิ่มเข้าไปได้ด้วย จอยลดามีหมวดหมู่นิยายให้เลือกอยู่เยอะเช่นกัน ไม่ว่าจะเป็นถนนสีชมพู (รัก โรแมนติก) ทะเลสีเทา (ดราม่า อกหัก) ดินแดนมหัศจรรย์ (แฟนตาซี) ลานฆาตกรรม (สืบสวน สอบสวน) ท่าเรือสีรุ้ง (วาย) โกดังแฟนคลับ (แฟนฟิคชั่น) เป็นต้น โดยใน 1 เรื่องอาจมีทั้งนิยายแชทและบทบรรยายปนกันไป ในแอปพลิเคชั่นนี้เรื่องส่วนมาจะเป็นการแต่งแฟนฟิกชัน ReadAWrite แอปอ่านนิยายน้องใหม่ที่กำลังเป็นที่สนใจให้กลุ่มคนรักการอ่าน โดยมีให้เลือกทั้งนิยายแชทและบทบรรยายเช่นเดียวกับจอยลดา แต่เพิ่มเติมคือมีหมวดหมู่บทความให้ความรู้และการ์ตูนด้วย ทำให้เนื้อหาค่อนข้างหลากหลายทีเดียว ธัญวลัย เป็นเว็บอ่านนิยายเครือเดียวกับจอยลดา เดิมทีธัญวลัยจะเป็นแพลตฟอร์มนิยายที่อยู่แค่บนเว็บไซต์เท่านั้น แต่ต่อมาต้องมีการพัฒนาเพื่อให้ตอบโจทย์การอ่านได้ทุกที่ทุกเวลาเลยมีแอปออกมา […]

Application ฟังเพลงบนสมาร์ทโฟน

กิจกรรมที่อยู่กับคนเรามาช้านานก็คงจะเป็นการฟังเพลง ที่สามารถสร้างได้ทุกโหมดอารมณ์ ไม่ว่าจะสุข จะเศร้า จะเหงา หรือจะเบื่อ เพลงก็ยังเป็นสิ่งที่ช่วยผ่อนคลายทำให้ความรู้สึกต่างๆ นั้นบาลานซ์กันยิ่งขึ้น ในการฟังเพลงของคนเราก็มีการพัฒนาอยู่เสมอ ตั้งแต่การเป็นเทปคาสเซ็ต มาจนเป็นเครื่องเล่น MP3 จนกระทั่งเข้ามาอยู่บนสมาร์ทโฟนอย่างแอปพลิเคชั่น ที่สามารถเล่นได้ทุกที่ทุกเวลา และมีเพลงให้เลือกมากมายทั่วโลก ถือว่าสะดวกสบายเป็นอย่างมาก ซึ่งวันนี้จะมาแนะนำแอปพลิเคชั่นฟังเพลงบนสมาร์ทโฟนกันเลย YouTube ถือว่าเป็นแอปฯยุคแรกๆ ที่คิดไม่ออกบอก YouTube โดยแอปฯนี้สามารถค้นหาเพลงได้อย่างหลากหลาย ซึ่งบริษัทหรือค่ายเพลงต่างๆ ก็ใช้ YouTube ในการโปรโมทเพลง ซึ่งในการฟังเพลงทุกเพลงนั้นคุณสามารถฟังได้ฟรีๆ แต่อาจจะมีโฆษณาขั้น ซึ่งต่อมาก็มีการเปิดตัว บแอป YouTube Music ที่เพิ่งเปิดให้บริการในไทยกันได้ไม่นาน ตัวแอปใช้งานได้อย่างง่ายดายเพราะมีการออกแบบการใช้งานให้ใกล้เคียงกับแอป YouTube ตัวแอปสามารถสลับไปมาระหว่างเพลงและ MV ได้ตลอดเวลา และยังสามารถดาวน์โหลดเก็บไว้ฟังได้ทั้งเพลงและ MV อีกด้วย JOOX สาวกเพลงไทยต้องชอบแน่นอน เพราะมีเพลงให้เลือกอย่างหลากหลายทั้งเก่าและใหม่ ซึ่งในเพลงก็จะมีทั้งเนื้อเพลง ทั้ง MV และคาราโอเกะต่าง ๆ แล้ว เมื่อไม่นานมานี้ joox เพิ่งเปิดตัวฟีเจอร์ใหม่อย่าง Karaoke Duet […]

เคลียร์ชัด ๆ ค่าเบี้ยประกันรถยนต์ ชั้น 1 ที่ต้องจ่ายทุกปี ให้ความคุ้มครองอะไรบ้าง?

การซื้อประกันรถยนต์ ถือเป็นราคาจ่ายที่ให้ความอุ่นใจให้กับผู้ขับขี่ได้เป็นอย่างดี และหลายคนก็เลือกที่จะจ่ายเบี้ยประกันรถยนต์ ชั้น 1 ที่ราคาสูงกว่าประกันภัยชั้นอื่น ๆ เพราะต้องการความอุ่นใจขั้นสูงสุด โดยไม่ศึกษารายละเอียดอื่น ๆ ให้ดี ว่าประกันประเภทนี้ให้ความคุ้มครองเรื่องอะไรบ้าง? หากคุณเป็นมือใหม่ที่อยู่ในช่วงที่กำลังตัดสินใจซื้อกรมธรรม์ แนะนำให้ดูก่อนว่าประกันชั้น 1 คุ้มครองอะไร และตอบโจทย์ความต้องการของคุณได้ดีจริงหรือไม่ เพื่อให้การเลือกซื้อมีประสิทธิภาพมากที่สุด จ่ายเบี้ยประกันรถยนต์ ชั้น 1 สูงกว่า ได้รับความอุ่นใจมากกว่าจริงไหม? เชื่อว่าหลายคนคงอยากรู้แล้วว่า เบี้ยประกันรถยนต์ ชั้น 1 ที่ต้องจ่ายในแต่ละปี ให้ความคุ้มครองในเรื่องใดบ้าง และเราก็ไม่ปล่อยให้คุณต้องรอนาน ได้รวบรวมรายละเอียดมาให้คุณเรียบร้อยแล้ว ไปดูกันเลย! 1. ความเสียหายที่เกิดขึ้นกับตัวรถที่ทำประกัน นอกจากประกันภัย ชั้น 1 จะให้ความคุ้มครองความเสียหายที่เกิดขึ้นกับรถคันที่เอาประกันแล้ว ยังรวมไปถึงอุปกรณ์ตกแต่งรถที่เพิ่มเข้ามาด้วย ไม่ว่าจะเป็นรถของผู้เอาประกันก็ดี หรือรถของคู่กรณีก็ดี แถมยังรวมถึงอุบัติเหตุที่เกิดแบบไม่มีคู่กรณี รถหาย ไฟไหม้ และน้ำท่วมอีกด้วย 2. บุคคลภายนอก เบี้ยประกันรถยนต์ ชั้น 1 ให้ความคุ้มครองต่อทรัพย์สิน ร่างกาย และชีวิตของคู่กรณี รวมถึงบุคคลภายนอกที่ได้รับความเสียหาย จากการเกิดอุบัติเหตุของรถที่ทำประกัน […]

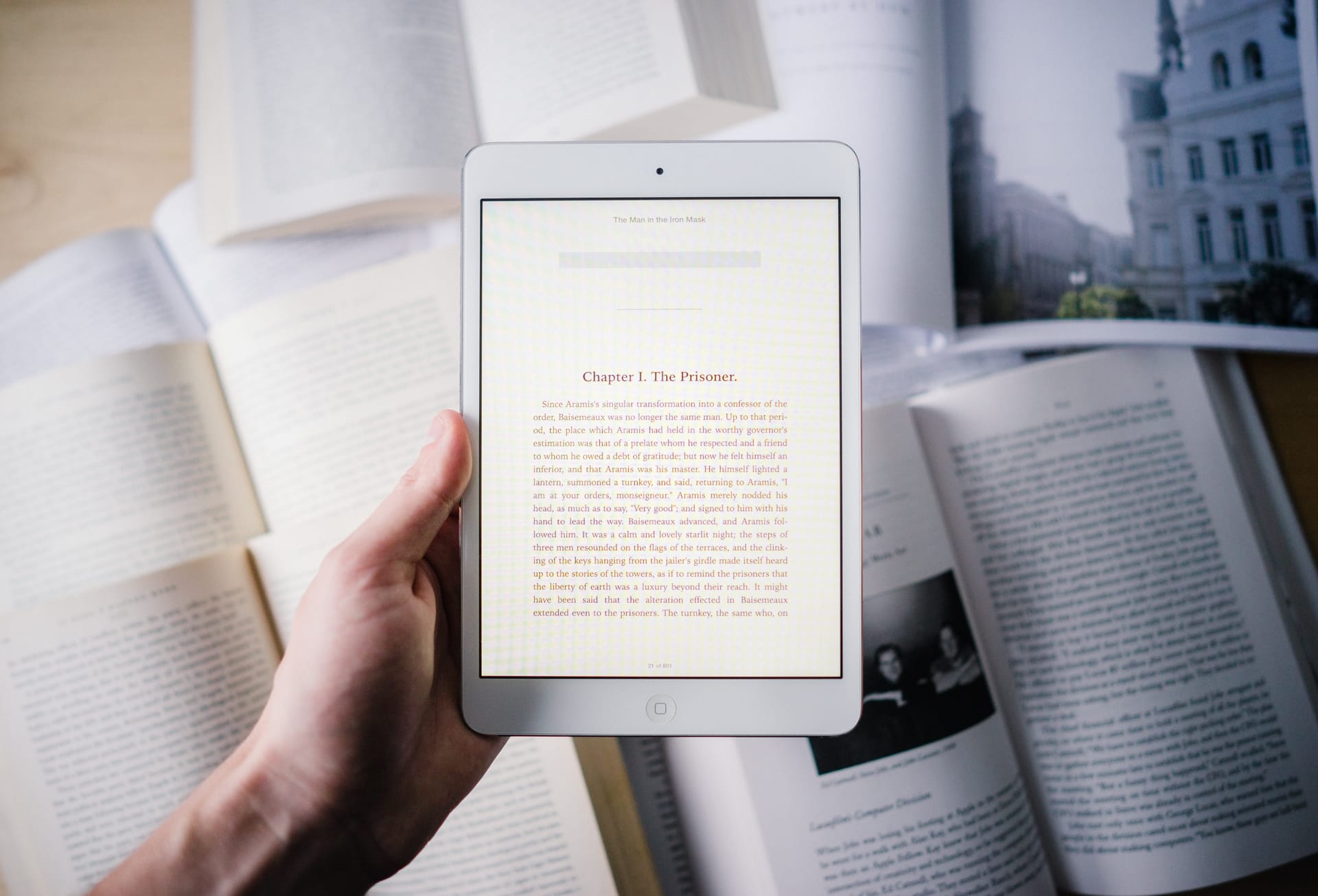

iPad กับการเรียน

ปฏิเสธไม่ได้เลยว่าในปัจจุบันเทคโนโลยีได้เข้ามามีบทบาทในการใช้ชีวิตประจำวันของผู้คนเป็นอย่างมาก ว่าจะเป็นเรื่องของข่าวสาร ความบันเทิง การใช้ชีวิต การทำงาน แม้กระทั่งการเรียน ที่เมื่อก่อนมีเพียงสมุดปากกาก็สามารถเรียนรู้ได้ แต่เดียวนี้บางสาขาวิชา บางมหาลัยจำเป็นจะต้องมีเครื่องมออุปกรณ์ต่างๆ สำหรับกาเรียนการสอน ซึ่งวันนี้จะยกตัวอย่าง iPad ที่ในปัจจุบันมีความเกี่ยวข้องกับการเรียนการสอนเป็นอย่างมาก มาดูกันว่า iPad มีบทบาทและมีประโยชน์ต่อการเรียนอย่างไรบ้าง ไม่ต้องแบกหนังสือเรียนหนักๆ เมื่อก่อนจะไปโรงเรียนทีก็สะพายกระเป๋าแบบหลังแอ่น พอไปถึงโรงเรียนก็พบว่าหนังสือที่จะต้องใช้ดันลืมเอามา แต่ถ้ามี iPad จะไม่พบปัญหาเหล่านี้อีกเลย เพราะทั้งหมดจะย่อมาอยู่ใน iPad เพียงเครื่องเดียวเท่านั้น ที่จะเป็นทั้งปากกาหลายหลากสี ดินสอหลายแบบ หน้ากระดาษสวยๆ ที่เลือกลายเองได้ แถมจะก๊อปปี้แชร์ไฟล์ผ่านทางไลน์หรืออัปโหลดผ่านช่องทางอื่นๆ ให้กี่คนก็ได้ มีเครื่องมือสำหรับการออกแบบ ใครที่เรียนสายออกแบบก็จะมีความชื่นชอบเป็นอย่างมาก เพราะใน iPad จะมีฟีเจอร์สามารถวาดรูปได้เหมือนวาดกับกระดาษเลยทีเดียว แถมยังไม่ต้องพกสีให้เปื้อนมือเปื้อนกระเป๋า วาด 1 รูป แล้วไม่ต้องวาดซ้ำให้เหมือนเดิม สามารถก็อปปี้แล้ววางก็จะได้รูปภาพที่เหมือนเดิมไม่ผิดเพี้ยนไปเลย ทำเอกสารหรือ Presentation ง่ายๆ สำหรับการทำงานเอกสารหรือการทำพรีเซ็นท์ เมื่อก่อนก็คงจะต้องหอบคอมพิวเตอร์พกพาอย่างโน็ตบุ๊กไปในทุกที่ ทั้งสายชาร์จ ทั้งเครื่อง ทั้งเมาท์ เป็นยายป้าหอบฟางกันเลยทีเดียว แต่ในสมัยนี้มีแค่ iPad เครื่องเดียวก็สามารถทำ Presentation […]